AI companies are being urged to replicate the safety calculations that underpinned Robert Oppenheimer’s first nuclear test before they release all-powerful AI systems.

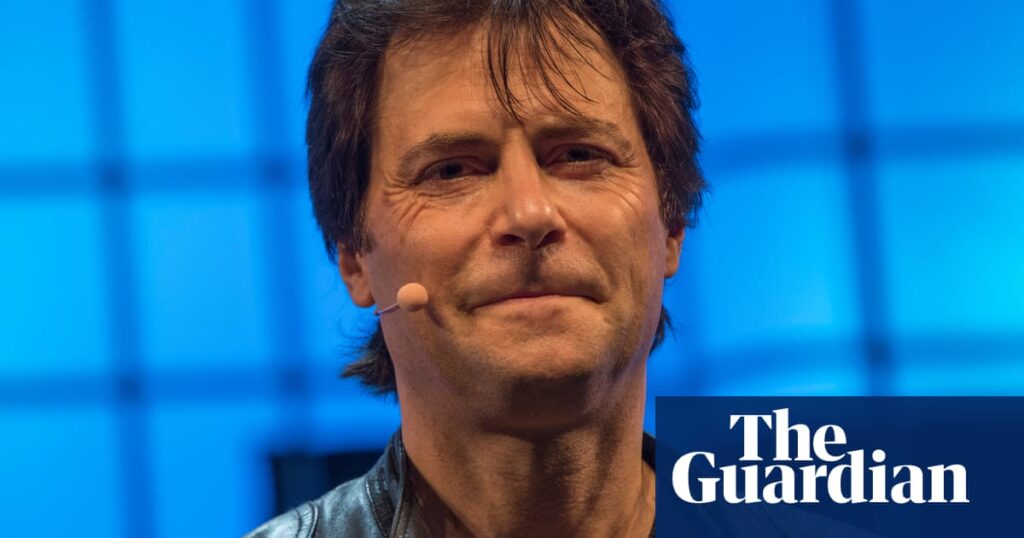

Max Tegmark, a prominent advocate for AI safety, said he had carried out calculations akin to those performed by American physicist Arthur Compton before the Trinity test, and had found a 90% probability that a highly advanced AI would pose an existential threat.

The US government went ahead with Trinity in 1945 after being assured that there was only a vanishingly small chance of an atomic bomb igniting the atmosphere and endangering humanity.

In a paper published by Tegmark and three of his students at the Massachusetts Institute of Technology (MIT), the authors recommend calculating the “Compton constant,” defined as the probability that an all-powerful AI escapes human control. In a 1959 interview with American author Pearl S. Buck, Compton said he had approved the test after calculating the odds of a runaway fusion reaction to be “slightly less” than one in three million.

Tegmark said AI companies should take responsibility for rigorously calculating whether artificial superintelligence (ASI), a theoretical system that surpasses human intelligence in all respects, would evade human control.

“Firms developing superintelligence ought to compute the Compton constant, which indicates the chances of losing control,” he stated. “Merely expressing a sense of confidence is not sufficient. They need to quantify the probability.”

Tegmark believes that achieving a consensus on the Compton constant, calculated by multiple firms, could create a “political will” to establish a global regulatory framework for AI safety.

A professor of physics at MIT and an AI researcher, Tegmark is also a co-founder of the Future of Life Institute, a nonprofit that advocates for the safe development of AI. The organization released an open letter in 2023 calling for a pause in the development of powerful AI systems. The letter gathered more than 33,000 signatures, including those of Elon Musk and Apple co-founder Steve Wozniak.

The letter appeared several months after the release of ChatGPT, which marked the start of a new era in AI development. It warned that AI laboratories were locked in an “out-of-control race” to deploy “ever more powerful digital minds.”

Tegmark discussed these issues with the Guardian alongside a group of AI experts, including tech industry leaders, representatives from state-supported safety organizations, and academics.

The Singapore Consensus on Global AI Safety Research Priorities report was crafted by distinguished computer scientist Yoshua Bengio and Tegmark, with contributions from staff at leading AI firms such as OpenAI and Google DeepMind. It sets out three broad priority areas for AI safety research: developing methods to measure the impact of current and future AI systems; specifying how an AI should behave and designing systems to achieve that; and managing and controlling a system’s behavior.

Referring to the report, Tegmark said the case for safe AI development had regained its footing since US Vice President JD Vance asserted that the future of AI would not be won by hand-wringing about safety.

Tegmark stated:

Source: www.theguardian.com