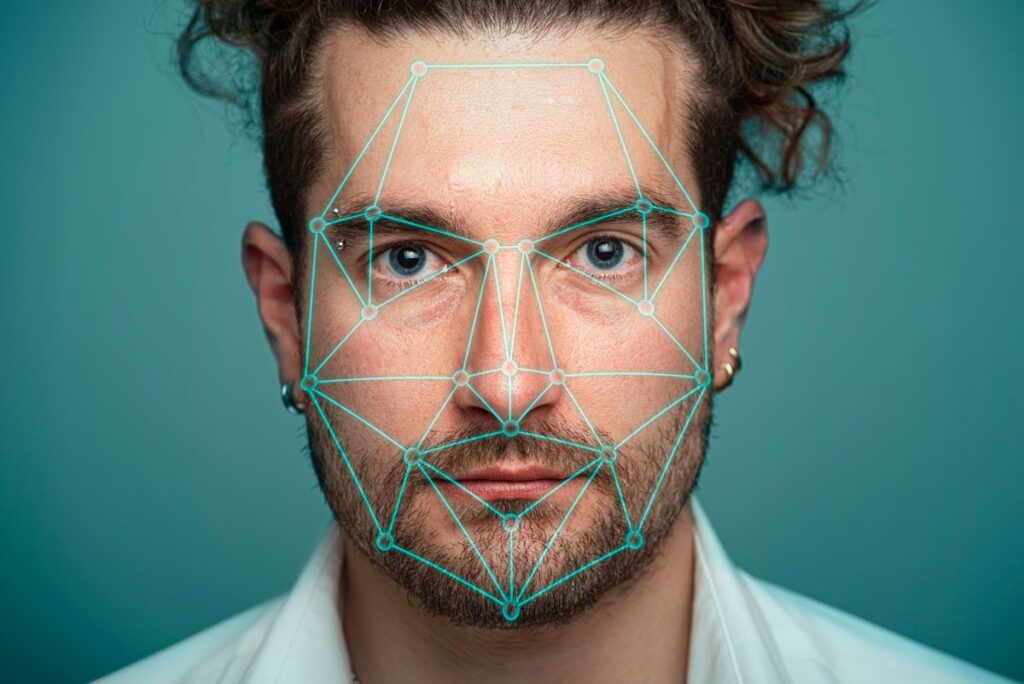

Deepfake technology—a method for digitally altering a person’s face or body to impersonate someone else—is advancing at an alarming rate.

According to a recent study published in the journal Frontiers in Imaging, this progress threatens some of the most cutting-edge deepfake detectors. These detectors look for a consistent pattern of blood flow across the face, a signal the study suggests may no longer be reliable, which complicates the search for harmful content.

Deepfakes are typically generated from “driving videos”: real footage that artificial intelligence modifies to completely change how a person appears in the video.

Not all applications of this technology are harmful. Smartphone apps that age your face or turn you into a cartoon character, for instance, use the same underlying techniques for innocent fun.

However, at their most malicious, deepfakes can be used to create non-consensual explicit content, disseminate false information, and unjustly implicate innocent individuals.

In the study, researchers used a cutting-edge deepfake detector based on medical imaging methods.

Remote photoplethysmography (rPPG) measures the heartbeat by detecting minute variations in blood flow beneath the skin, much as the pulse oximeters used in healthcare settings do.

The detector is remarkably accurate, differing by only 2-3 beats per minute from electrocardiogram (ECG) recordings.
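To give a feel for how a pulse can be read from video, here is a minimal sketch in Python. It is not the study's detector. It assumes the video has already been reduced to one number per frame (for example, the average green-channel brightness of a face region) and simply looks for the strongest rhythm in a plausible heart-rate range. The function name `estimate_bpm`, the 40-180 beats-per-minute search range, and the synthetic trace are all illustrative assumptions.

```python
import math

def estimate_bpm(signal, fps, lo_bpm=40, hi_bpm=180):
    """Return the candidate pulse rate (in BPM) with the strongest periodic component."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]  # remove the constant skin-tone level

    best_bpm, best_power = None, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq_hz = bpm / 60.0
        # Project the trace onto a cosine and a sine wave at this frequency.
        re = sum(c * math.cos(2 * math.pi * freq_hz * i / fps)
                 for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * freq_hz * i / fps)
                 for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm

# Synthetic stand-in for a per-frame brightness trace (hypothetical values):
# ten seconds of 30 fps video with a faint 72 BPM ripple on a constant level.
fps = 30
trace = [100 + 0.5 * math.sin(2 * math.pi * (72 / 60) * i / fps)
         for i in range(fps * 10)]
print(estimate_bpm(trace, fps))
```

Real footage is far noisier than this synthetic trace, so a practical system would also need face tracking, motion compensation, and filtering, none of which are shown here.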

It was previously believed that deepfakes couldn’t replicate these subtle signals well enough to fool such pulse-based detectors, but that assumption has proven incorrect.

“If the driving video features a real person, this information can now be transferred to deepfake videos,” stated Professor Peter Eisert, a co-author of the research, in an interview with BBC Science Focus. “I think that’s the trajectory of all deepfake detectors. As deepfakes evolve, detectors that were once effective may soon become ineffective.”

During testing, the team found that the latest deepfake videos often displayed a remarkably realistic heartbeat, even when one had not been deliberately added.

Does this mean we are doomed to never trust online videos again? Not necessarily.

Eisert’s team is optimistic that new detection approaches will prove effective. Rather than simply measuring the overall pulse rate, future detectors may track detailed blood flow dynamics across the face.

“As the heart beats, blood circulates through the vessels and into the face,” Eisert explained. “This flow is then distributed throughout the facial region, and the movement has a slight time delay that can be detected in genuine footage.”
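The time delay Eisert describes can be illustrated with a small Python sketch. It assumes two pulse traces have already been extracted from different parts of the face (labelled here, hypothetically, as cheek and forehead) and finds the frame shift at which they line up best. The function `best_lag` and the three-frame delay in the synthetic data are illustrative assumptions, not figures from the study.

```python
import math

def best_lag(reference, other, max_lag):
    """Return the shift (in frames) of `other` relative to `reference`
    that gives the highest average product of the two centered traces."""
    def centered(values):
        mean = sum(values) / len(values)
        return [v - mean for v in values]

    a, b = centered(reference), centered(other)
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Pair each reference sample with the sample `lag` frames later in `other`.
        pairs = [(a[i], b[i + lag]) for i in range(len(a)) if 0 <= i + lag < len(b)]
        score = sum(x * y for x, y in pairs) / len(pairs)
        if score > best_score:
            best, best_score = lag, score
    return best

# Synthetic traces (hypothetical): the same 72 BPM pulse, with the second
# region lagging the first by three frames of 30 fps video.
fps = 30
n_frames = fps * 10

def pulse(frame):
    return math.sin(2 * math.pi * 1.2 * frame / fps)

cheek = [pulse(i) for i in range(n_frames)]
forehead = [pulse(i - 3) for i in range(n_frames)]
print(best_lag(cheek, forehead, max_lag=5))
```

The idea is that genuine footage should show small, consistent delays between regions, while a video with only a uniform pulse pasted across the face would not. How a future detector would actually measure this is not described in the article.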

Ultimately, however, Eisert is skeptical that deepfake detection alone will win the battle. Instead, he advocates “digital fingerprints” (cryptographic proof that video content has not been tampered with) as a more sustainable solution.

“I fear there will come a time when deepfakes are incredibly difficult to detect,” Eisert remarked. “I personally believe that focusing on technologies that verify the authenticity of footage is more vital than just distinguishing between genuine and fake content.”
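One simple way to picture a digital fingerprint is a keyed hash of the video file: change a single byte and the fingerprint no longer matches. The Python sketch below uses the standard library's HMAC-SHA256 for this. It is only an illustration under stated assumptions: the article does not say which scheme Eisert has in mind, the function names and the key are hypothetical, and real provenance systems would more likely use public-key signatures so that anyone can verify footage without holding a secret.

```python
import hashlib
import hmac

def fingerprint(video_bytes: bytes, key: bytes) -> str:
    """Compute a keyed SHA-256 fingerprint of the raw video bytes."""
    return hmac.new(key, video_bytes, hashlib.sha256).hexdigest()

def is_untampered(video_bytes: bytes, key: bytes, expected: str) -> bool:
    """Check the footage against a previously issued fingerprint."""
    return hmac.compare_digest(fingerprint(video_bytes, key), expected)

# Hypothetical key and stand-in video data, for illustration only.
key = b"camera-secret-key"
original = b"\x00\x01\x02 raw video bytes \x03\x04"
tag = fingerprint(original, key)

print(is_untampered(original, key, tag))            # unchanged footage
print(is_untampered(original + b"\xff", key, tag))  # altered footage
```

The first check passes and the second fails, because any edit to the bytes changes the hash. The approach proves that a file is unchanged since it was fingerprinted. It says nothing about whether the original recording was genuine.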

About our experts

Peter Eisert is the head of the Vision & Imaging Technologies Department and chair of visual computing at Humboldt University in Germany. A professor of visual computing, he has published more than 200 conference and journal papers. He also serves as an associate editor for the Journal of Image and Video Processing and sits on the editorial board of the Journal of Visual Communication and Image Representation.

Source: www.sciencefocus.com