Apple has unveiled an extensive array of iOS accessibility features aimed at supporting individuals with visual and auditory impairments, challenging the perception that Apple’s hardware pricing makes accessibility costly.

Ahead of Global Accessibility Awareness Day on Thursday, May 15th, Apple previewed accessibility features that will debut later this year. They include live captions, Personal Voice replication, reading tools, an upgraded Braille reader, and accessibility “nutrition labels.”

The nutrition labels will require developers to set out which accessibility features their apps support, such as VoiceOver, Voice Control, or larger text.

Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives, told Guardian Australia she hoped the nutrition labels would encourage developers to build more accessibility options into their apps.

“[It] gives them a real opportunity to understand what it means to be accessible and why they should pursue it and expand upon it,” she remarked.

“By doing this, we’re giving them the chance to evolve. There might be aspects they are already excelling in.”

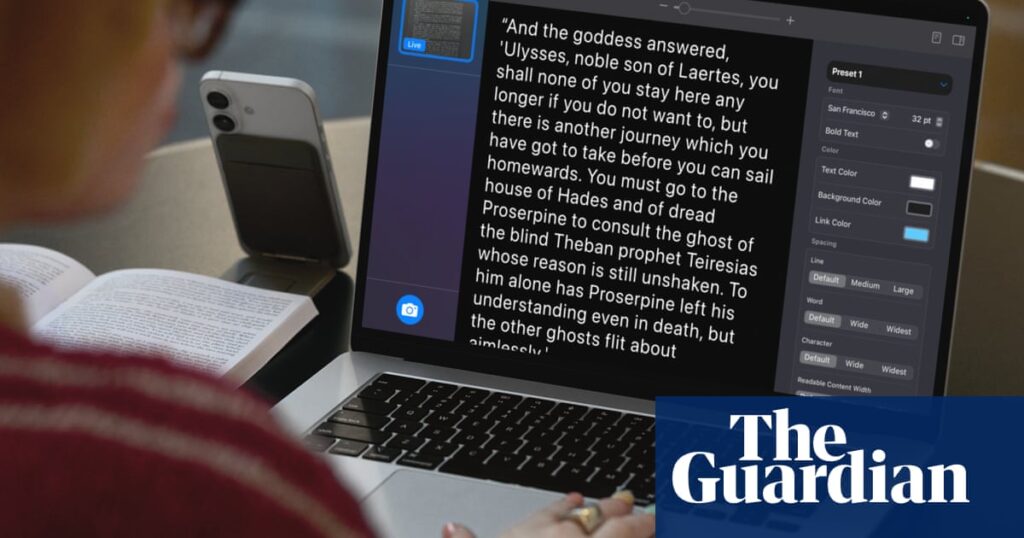

The company has also brought its Magnifier app to the Mac, letting users zoom in on screens or whiteboards through a camera or connected iPhone, for example to read presentations during lectures.

The updated Braille features allow note-taking with Braille Screen Input or a compatible Braille device, as well as calculations in Nemeth Braille, a Braille code used in mathematics and science.

Apple’s Live Listen feature lets an iPhone or iPad act as a microphone and send sound to a hearing device. Photo: Apple

The updated Personal Voice feature lets users replicate their voice from just 10 phrases; the previous version required 150 phrases and an overnight wait while the model was processed. Apple says the replicated voice is password-protected and stays on the device unless it is backed up to iCloud, where it is encrypted, minimizing the risk of misuse.

Herrlinger said that as Apple has advanced its work in artificial intelligence, the accessibility team has actively looked for ways to build those advances into its features.

“We have been collaborating closely with the AI team over the years, ensuring we leverage the latest advancements as new opportunities arise,” she stated.

Google’s Android operating system offers several comparable accessibility features, such as live captions, Braille readers, and magnification tools, and Google announced new AI-powered features this week.

Apple’s Live Listen feature, which lets users amplify sound through their AirPods in settings such as lecture halls, is gaining live captions. Apple also recently introduced functionality that lets people with hearing loss use AirPods as hearing aids.

While Apple’s hardware is typically viewed as high-end in the smartphone market, Herrlinger disputes the notion that the company’s accessibility options come at a premium, emphasizing that these features are built into the operating system at no additional cost.

“It’s available out of the box without extra charges,” she asserted.

“Our aim is to develop various accessibility features because we understand that each individual’s experience in the world is unique. Different people utilize various accessibility tools to aid them, whether it’s a single challenge or multiple.”

Herrlinger said it was more cost-effective for customers to have multiple accessibility features on a single device than to rely on separate devices for each need.

“Now, they’re all integrated into a single device that has the same price for everyone,” she remarked. “Thus, in our view, it’s about making accessibility more democratic within the operating system.”

Chris Edwards, head of corporate affairs at Vision Australia, commended Apple for building accessibility features into its products and operating systems, drawing on his own experience as a blind man who uses a Seeing Eye Dog.

“I believe that interpreting images through the new features enhances accessibility for all. The ability to interpret images in real-time is a significant step towards improving lives,” he stated.

“The new accessibility features seem particularly beneficial for students in educational settings, reinforcing that Braille remains a crucial mode of communication.”

Source: www.theguardian.com