“Where did CrowdStrike go wrong?” is, if anything, a question with slightly too many answers.

Working backwards: if you push an update to every computer on your network at the same time, by the time you find a problem, it’s too late to contain the impact. With a phased rollout, by contrast, the update is pushed to users in small groups, usually accelerating over time. If you start by updating 50 systems at once and they all immediately lose connection, you can hope to notice the problem before you update the next 50 million systems.
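To make the idea concrete, here is a minimal sketch of a phased rollout in Python. It is an illustration only, not a description of how CrowdStrike or anyone else deploys updates: the function name `phased_rollout`, the `apply_update` callback, the batch sizes, and the failure threshold are all assumptions invented for the example.

```python
from typing import Callable, List


def phased_rollout(
    machines: List[str],
    apply_update: Callable[[str], bool],  # hypothetical: True if the machine is healthy afterwards
    first_batch: int = 50,
    growth: int = 10,
    max_failure_rate: float = 0.01,
) -> List[str]:
    """Update machines in growing batches and stop once a batch looks unhealthy.

    Returns the machines that received the update before the rollout ended.
    """
    updated: List[str] = []
    batch_size = first_batch
    position = 0
    while position < len(machines):
        batch = machines[position:position + batch_size]
        failures = sum(1 for machine in batch if not apply_update(machine))
        updated.extend(batch)
        if failures / len(batch) > max_failure_rate:
            # Halt here: the damage is limited to the machines updated so far.
            break
        position += len(batch)
        batch_size *= growth  # accelerate as confidence grows
    return updated


fleet = [f"pc-{i}" for i in range(100_000)]
# An update that breaks every machine it touches is caught after the first batch.
print(len(phased_rollout(fleet, apply_update=lambda machine: False)))  # 50
```

The trade-off is visible in the parameters: a smaller first batch and slower growth contain a bad update better, but delay a good one.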

If you don’t do a staged rollout, you need to test the update before pushing it to users. The extent of pre-release testing is usually up for debate; there are countless configurations of hardware, software, and user requirements, and your testing regime must narrow down what’s important, and hope that nothing is overlooked. Thankfully, if 100% of computers with the update installed experience crashes and become inoperable until you manually apply a tedious fix, it’s easy to conclude that you didn’t test enough.

And if you’re neither doing a staged rollout nor testing the update before it ships, you need to make sure that it isn’t broken.

Broken

In CrowdStrike’s defense, I can understand why this happened. The company offers a service called “endpoint protection,” which, if you’ve been in the Windows ecosystem for a few years, might be easiest to think of as antivirus. It’s built for the enterprise market, not the consumer market, and it not only protects against common malware but also tries to prevent individual computers used by companies from becoming an attacker’s foothold on the corporate network.

This applies not only to PCs used by large corporations that need to provide every employee with a keyboard and mouse, but also to any other business with large numbers of cheap, flexible machines. If you left your house on Friday, you know what that means: advertising displays, point-of-sale terminals, and self-service kiosks were all affected.

The comparison is relevant because CrowdStrike is in a space where speed is crucial. The worst-case scenario, at least until last week, was a ransomware worm like WannaCry or NotPetya: malware that not only does significant damage to infected machines but also spreads automatically within and between corporate networks. So its first line of defense operates quickly: rather than waiting for a weekly or monthly release schedule for software updates, the company pushes out files daily to address the latest threats to the systems it protects.

Even the limited delay of a phased rollout could cause real damage. WannaCry knocked out many NHS computers during the few hours it spread unchecked, before it was accidentally halted by British security researcher Marcus Hutchins while he was trying to figure out how it worked. In a scenario like that, a phased rollout could result in loss of life. Delays for testing could be even more costly.

The updates are also not supposed to be able to cause this kind of problem: rather than new code that runs on each machine, they are more like dictionary updates that tell already-installed CrowdStrike software what new threats to look out for and how to recognize them.
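The distinction between shipping code and shipping data can be sketched in a few lines of Python. This is a toy illustration, not CrowdStrike’s actual format or engine: the `ThreatDefinition` class, the `scan` function, and the byte patterns are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ThreatDefinition:
    name: str
    pattern: bytes  # hypothetical: a byte sequence that marks content as suspicious


def scan(content: bytes, definitions: List[ThreatDefinition]) -> List[str]:
    """The installed 'engine': fixed code that consults whatever definitions it is given."""
    return [d.name for d in definitions if d.pattern in content]


# The definitions already on the machine, then a daily update that only adds data.
definitions = [ThreatDefinition("example-worm", b"EVIL_PAYLOAD")]
definitions.append(ThreatDefinition("example-dropper", b"DROP_ME"))

print(scan(b"harmless text DROP_ME more text", definitions))  # ['example-dropper']
```

The `scan` function never changes between updates; only the list it reads does. That is why such updates are treated as low risk, although the installed code still has to cope with whatever data it is handed.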

At the loosest level, you can think of it as something like this article: you’re probably reading it through some application, like a web browser, an email client, or the Guardian app. (If you’ve arranged for someone to print this and deliver it to you with your morning coffee, congratulations!) We haven’t done a staged rollout or full testing of the article, because there is nothing it could break.

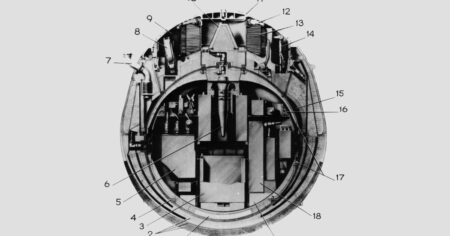

Unfortunately, the update pushed out on Friday actually did something. High-level technical details remain unclear, and until CrowdStrike reveals the full details, we’ll just take their word for it. The update, which was meant to teach the system how to detect a specific type of cyberattack that had already been seen in the wild, actually “introduced a logic error, causing the operating system to crash.”

I’ve been covering this sort of thing for over a decade now, and my guess is that this “logic error” boils down to one of two things: either an almost incomprehensible failure condition occurred in one of the most complex systems mankind has ever built, causing a catastrophic event through an almost unthinkable combination of bad luck, or someone did something incredibly stupid.

Sometimes there are no lessons

There have been a lot of comments over the past few days.

- This is an inevitable evil that results from the concentration of power in the technology sector in just a few companies.

- This is an inevitable consequence of the EU prohibiting Microsoft from restricting antivirus companies’ ability to tamper with basic levels of Windows.

- This is the inevitable harm of cybersecurity regulation that focuses more on checking boxes than on actual security.

- This wasn’t a security issue because no one was hacked – it was just a bug.

None of them holds up. CrowdStrike, despite the disruption it caused, doesn’t wield much power. It’s one of the big players in the space, but it’s installed on only about 1% of PCs. Microsoft does claim that the failure happened only because of regulation. But in the alternative world where third-party security companies can’t operate on Windows, with Microsoft setting itself up as the only line of defense, the first big failure would affect 100% of PCs.

Cybersecurity regulations have actually benefited companies that have adopted CrowdStrike, turning complicated certification processes into a simple box to tick, and maybe that’s a good thing: “Buy a product to be safe” is the only reasonable request for the vast majority of companies, and CrowdStrike has delivered, except for that one unfortunate time.

But unfortunate or not, it was definitely a security issue. The golden triangle of information security has three goals: confidentiality (are the secrets kept secret?), integrity (is the data correct?), and availability (can the system be used?). CrowdStrike could not maintain availability, which meant they could not protect their customers’ information security.

In the end, the only lesson I can take comfort in is that this is going to happen more. We’ve become so good at managing so many of our society’s failures that the ones that do hit us from now on will be more unexpected and more severe, and we will be less prepared for them. Just as a driver can become so confident in their cruise control that they lose control right before an accident, we’ve managed to make catastrophic IT failures so rare that recovering from them is a marathon effort.

Yay?

The Wider TechScape

- “A complete river of rubbish”: Josh Taylor of Guardian Australia on how Facebook and Instagram algorithms fed sexism and misogyny to a blank account.

- Is the world’s largest search engine broken? Tom Faber asks whether Google is losing momentum.

- Is this the end of the story of Craig Wright? Posting the court’s full decision to your own Twitter feed does feel like a final word on the last decade of your career.

- Parents have even more reason to worry, as AI technology overwhelms efforts to catch child abusers.

- And Roblox is back in the spotlight over child sexual abuse failures. Critics say the company’s privacy stance makes things worse.

Source: www.theguardian.com