Google has temporarily blocked its new artificial intelligence model from generating images of people after it depicted World War II German soldiers and Vikings as people of color.

The company announced that it would stop its Gemini model from creating images of people after social media users posted examples of images generated by the tool that depicted historical figures, such as the Pope and the Founding Fathers of the United States, in a variety of ethnicities and genders.

“We are already working to address recent issues with Gemini's image generation functionality. While we do this, we will pause human image generation and plan to re-release an improved version soon,” Google said in a statement.

Google did not mention specific images in its statement, but examples of Gemini's image results are widely available on X, along with commentary on issues surrounding AI accuracy and bias. One former Google employee said: “It was difficult to get Google Gemini to acknowledge the existence of white people.”

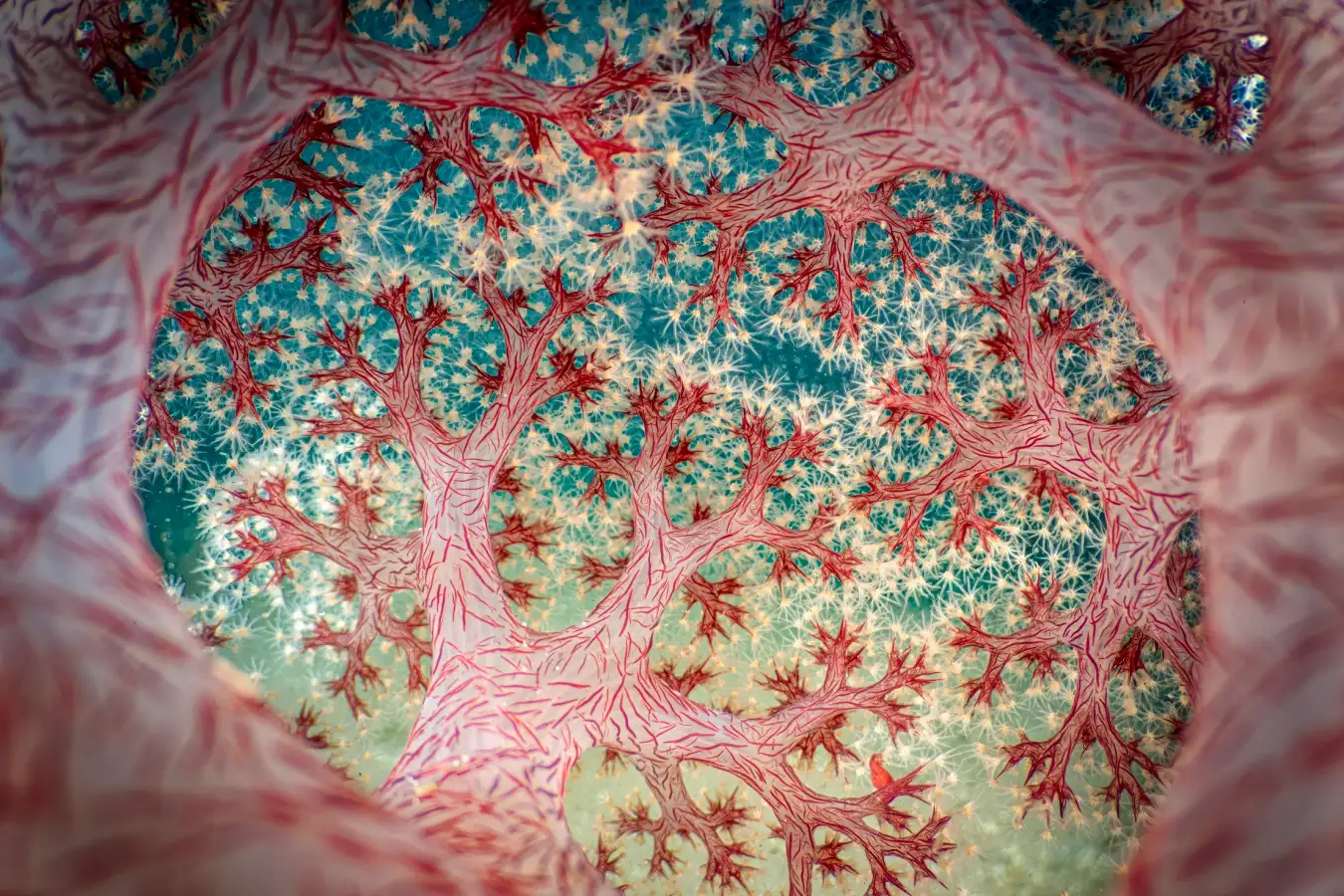

A Gemini illustration of a 1943 German soldier. Photo: Gemini AI/Google

Jack Krawczyk, a senior director on Google's Gemini team, acknowledged Wednesday that the model's image generator (not available in the UK and Europe) needs tweaking.

“We are working to improve this type of depiction immediately,” he said. “Gemini's AI image generation generates a variety of people, which is generally a good thing since people all over the world are using it. But here it misses the point.”

We are already working to address recent issues with Gemini's image generation capabilities. While we do this, we will pause human image generation and plan to re-release an improved version soon. https://t.co/SLxYPGoqOZ

— Google Communications (@Google_Comms) February 22, 2024

In a statement on X, Krawczyk added that Google's AI principles commit its image generation tools to “reflect our global user base.” He said Google would continue to do so for “open-ended” image requests such as “dog walker,” but acknowledged that responses to prompts with a historical slant need further work.

“There's more nuance in the historical context, and we'll make further adjustments to accommodate that,” he said.

We are aware that Gemini is introducing inaccuracies in some historical image generation depictions and are working to correct this immediately.

As part of the AI principles https://t.co/BK786xbkey, we design our image generation capabilities to reflect our global user base and…

— Jack Krawczyk (@JackK) February 21, 2024

Reports on AI bias are filled with examples of negative impacts on people of color. A Washington Post investigation last year showed multiple examples of image generators exhibiting bias against people of color, as well as sexism. It found that the image generation tool Stable Diffusion XL depicted food stamp recipients as primarily non-white or darker-skinned, even though 63% of U.S. food stamp recipients are white. Requesting images of people “participating in social work” yielded similar results.

Andrew Rogoiski, from the University of Surrey's Institute for Human-Centered AI, said it is “a difficult problem to reduce bias in most areas of deep learning and generative AI,” and that mistakes are likely as a result.

“There is a lot of research and different approaches to eliminating bias, from curating training datasets to introducing guardrails for trained models,” he said. “It is likely that AIs and LLMs [large language models] will still make mistakes, but it is also likely that they will improve over time.”

Source: www.theguardian.com