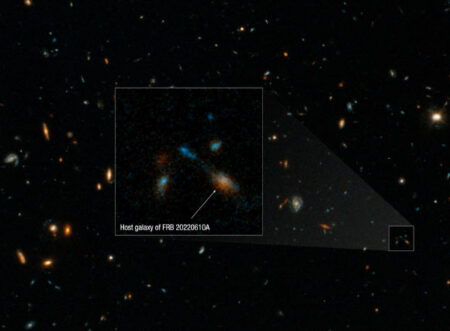

The evolving experience of young people on the internet. Linda Raymond/Getty Images

In 2025, numerous countries introduced new internet access restrictions aimed at protecting children from harmful content, and more are expected to follow in 2026. But do these measures genuinely safeguard children, or do they merely inconvenience adults?

The UK’s Online Safety Act (OSA), whose child-protection rules took effect on July 25, requires websites to prevent children from accessing pornography or content that promotes self-harm, violence, or dangerous activities. Although intended to protect, the law has faced a backlash over its broad definition of “harmful content,” which led many small websites to close because they struggled to meet the regulatory requirements.

In Australia, the Online Safety Amendment (Social Media Minimum Age) Act 2024 bars people under 16 from using social media, even with parental consent. The law, which is now in force, allows regulators to fine companies up to A$50 million if they fail to keep minors off their platforms. The European Union is considering similar bans. Meanwhile, France has introduced a law requiring age verification on websites that host pornographic material, prompting protests from adult website operators.

There are signs that such legislation is having an effect. The UK’s regulator, Ofcom, recently fined AVS Group, which runs 18 adult websites, £1 million for failing to put adequate measures in place to keep children out. Other companies are being urged to step up their efforts to comply with the new rules.

Yet concerns are growing about the technology used for age verification: some sites rely on facial recognition tools that can be fooled with screenshots of video game characters. VPNs, moreover, let users appear to be in regions without strict age verification requirements. Searches for VPNs surged after the OSA came into force, and reports indicated increases of as much as 1800% in daily VPN registrations. The most popular adult site saw a 77% drop in UK visitors after the OSA took effect, as users changed their settings to appear to be located in countries where age verification isn’t enforced.

The Children’s Commissioner for England has stressed that these loopholes need to be closed and has recommended age verification measures to stop children from using VPNs. Even so, many argue that such responses treat the symptoms rather than the root of the problem. So what should be done?

Andrew Coun, who previously worked on safety and moderation teams at Meta and TikTok, says harmful content isn’t deliberately targeted at children. Instead, he argues, algorithms are built to maximize engagement, which in turn boosts ad revenue. That raises doubts about whether tech companies genuinely want to protect children, since tighter restrictions could hurt their profits.

“It’s exceedingly unlikely that they will prioritize compliance,” he remarked, noting the inherent conflict between their interests and public welfare. “Ultimately, profits are a primary concern, and they will likely fulfill only the minimum requirements to comply.”

Graham Murdoch, a researcher at Loughborough University, believes the wave of online safety regulation is likely to disappoint, because policymaking tends to lag behind fast-moving technology firms. He advocates a national internet service, with its own search engine and social media platforms, governed by a public charter similar to the BBC’s.

“The internet should be regarded as a public service because of the immense value it offers to everyday life,” Murdoch stated. “We stand at a pivotal moment; if decisive action isn’t taken soon, it will be impossible to change our current trajectory.”

Source: www.newscientist.com