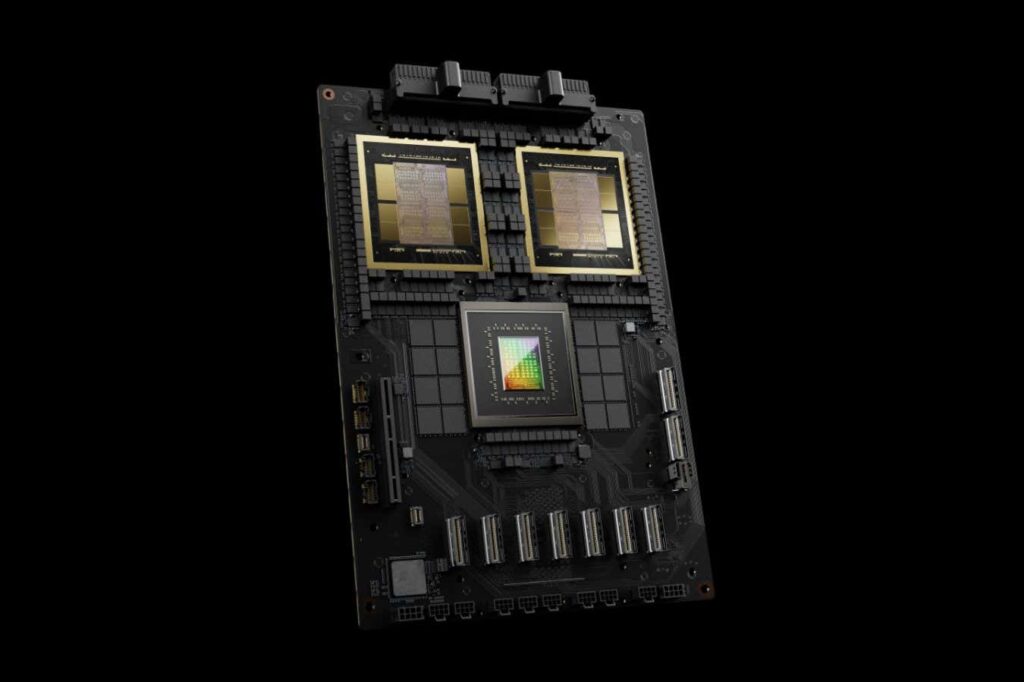

Nvidia GB200 Grace Blackwell Super Chip

Nvidia

Nvidia has announced the most powerful “superchip” it has ever produced for training artificial intelligence models. The U.S. computing company, whose value has recently soared to make it the world's third most valuable company, has not yet disclosed the price of its new chips, but observers say they will be available only to a small number of organizations.

The chip was announced by Nvidia CEO Jensen Huang at a press conference on March 18 in San Jose, California. He showed off the company's new Blackwell B200 graphics processing unit (GPU). Each GPU has 208 billion transistors, the tiny switches at the heart of modern computing devices, compared with 80 billion transistors in Nvidia's current-generation Hopper chips. He also revealed the GB200 Grace Blackwell Superchip, which combines two B200 chips.

“Blackwell will be a great system for generative AI,” Huang said. “And in the future, data centers will be thought of as AI factories.”

GPUs have become coveted hardware for any organization looking to train large-scale AI models. During an AI chip shortage in 2023, Elon Musk said GPUs were “a lot harder to get than drugs,” and some academic researchers without access lamented being “GPU poor.”

Nvidia says its Blackwell chips deliver a 30-fold performance improvement over Hopper GPUs when running generative AI services based on large language models such as GPT-4, while consuming 25 times less power.

Training OpenAI's GPT-4 large language model required approximately 8,000 Hopper GPUs and 15 megawatts of power over 90 days, whereas the company says the same training could be performed using just 2,000 Blackwell GPUs and 4 megawatts of power.
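As a rough check on those figures, the short Python sketch below works out the ratios and the total electricity implied by each scenario. It assumes the 90-day training period applies to both chip generations and that the quoted power draw is constant throughout; the function and variable names are illustrative and do not come from Nvidia.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours for a constant power draw over a number of days."""
    return power_mw * days * HOURS_PER_DAY


# Figures quoted in the article for training GPT-4.
hopper_gpus, hopper_power_mw = 8_000, 15.0
blackwell_gpus, blackwell_power_mw = 2_000, 4.0
training_days = 90  # assumption: the same duration for both scenarios

hopper_energy = training_energy_mwh(hopper_power_mw, training_days)
blackwell_energy = training_energy_mwh(blackwell_power_mw, training_days)

gpu_ratio = hopper_gpus / blackwell_gpus
power_ratio = hopper_power_mw / blackwell_power_mw

# Average power per GPU in kilowatts (total quoted power divided by GPU count).
hopper_kw_per_gpu = hopper_power_mw * 1000 / hopper_gpus
blackwell_kw_per_gpu = blackwell_power_mw * 1000 / blackwell_gpus

print(f"Hopper: {hopper_energy:,.0f} MWh, {hopper_kw_per_gpu} kW per GPU")
print(f"Blackwell: {blackwell_energy:,.0f} MWh, {blackwell_kw_per_gpu} kW per GPU")
print(f"GPU count ratio: {gpu_ratio}, power ratio: {power_ratio}")
```

Under these assumptions, the quoted saving comes from needing a quarter as many chips, since the power attributed to each GPU is roughly the same in both cases.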

The company has not yet revealed the cost of its Blackwell GPUs, but given that Hopper GPUs already cost between $20,000 and $40,000 each, their prices could reach eye-watering levels. The focus on developing more powerful and expensive chips means they will be “available only to a select few organizations and countries,” says Sasha Luccioni at Hugging Face, a company that develops tools to share AI code and datasets. “Aside from the environmental impact of this already highly ene…

Power demand from data center expansion, driven primarily by the generative AI boom, is expected to double by 2026 and rival Japan's current energy consumption. If data centers that support AI training continue to rely on fossil fuel power plants, this could also bring a sharp increase in carbon emissions.

Global demand for GPUs also means more geopolitical complications for Nvidia, as tensions and strategic competition between the US and China increase. The U.S. government has instituted export controls on advanced chip technology to slow China's AI development efforts, saying this is critical to U.S. national security, which has forced Nvidia to produce lower-performance versions of its chips for Chinese customers.

Source: www.newscientist.com