James Muldoon is a lecturer in management at the University of Essex, Mark Graham is a professor at the Oxford Internet Institute and Callum Cant is a senior lecturer at the Essex Business School. All three work on Fair Work, a project that evaluates working conditions in the digital workplace, and they are the co-authors of Feeding the Machine: The Hidden Human Labor that Powers AI.

Why did you write that book?

James Muldoon: The idea for the book came from field research that we did in Kenya and Uganda about the data annotation industry. We spoke to a lot of data annotators, and the working conditions were just horrible. And we thought this was a story that everyone needed to hear: people working for less than $2 an hour on precarious contracts, and work that’s largely outsourced to countries in the global south because of how hard and dangerous the work is.

Why East Africa?

Mark Graham: I started my research in East Africa in 2009, working on the first of many undersea fiber optic cables that would connect East Africa with the rest of the world. The focus of my research was what this new connectivity would mean for the lives of workers in East Africa.

How did you gain access to these workplaces?

Mark Graham: The basic idea of Fair Work is to establish fair labor principles and then rate companies on those principles. We give companies a score out of 10. Companies in Nairobi and Uganda opened up to us because we were trying to give them a score and they wanted a better score. We went to them with a zero out of 10 and said, “Here’s what you need to do to improve.”

Do companies cooperate with you? Or do they dispute your low scores?

Mark Graham: We get a variety of responses. Some people will argue that what we’re asking for is simply not possible. They’ll say, “It’s not our responsibility to do these things,” and so on. The nice thing about the score is that we can point to other companies that are already doing these things. We can say, “Look, this company is doing it. What’s wrong with your company? Why can’t you offer these terms to your employees?”

Can you talk about the reverberations of colonialism that you found in this data work?

Mark Graham: The East African Railway once ran from Uganda to the port of Mombasa. It was funded by the British government and was basically used to extract resources from East Africa. What’s interesting about the East African fiber optic connection is that it runs along a very similar route to the old railway, and again, it’s an extractive technology.

Could you please explain the concept of the “extraction machine”?

Callum Cant: When we look at AI products, we tend to think of them as something that is relatively organically created, and we don’t think about the human effort, the resource requirements, and all the other things that go on behind the scenes.

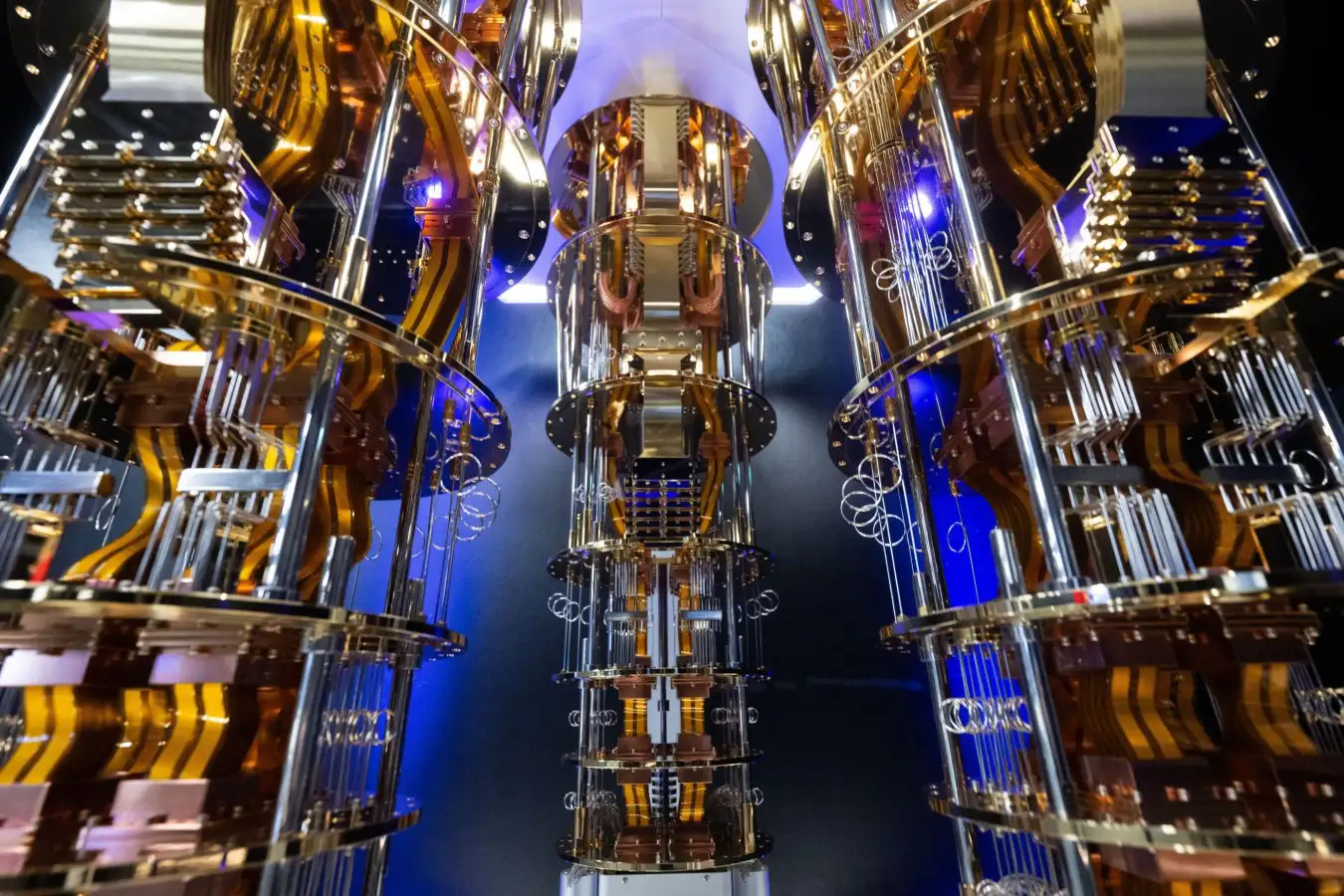

For us, the extraction machine is a metaphor that invites us to think more deeply about whose labor, whose resources, whose energy, whose time went into the process. This book is an attempt to look beyond the superficial appearance of sleek webpages and images of neural networks to really see the embodied reality of what AI looks like in the workplace and how it interacts with people.

James Muldoon: I think a lot of people would be surprised to know that 80% of the work behind AI products is actually data annotation, not machine learning engineering. If you take self-driving cars as an example, one hour of video data requires 800 hours of human data annotation. So it’s a very intensive form of work.

How does this concept differ from Shoshana Zuboff’s idea of surveillance capitalism?

James Muldoon: Surveillance capitalism best describes companies like Google and Facebook, which make their money primarily through targeted advertising. It’s an apt description of the data-to-ads pipeline, but it doesn’t really capture the broader infrastructural role that Big Tech now plays. The Extraction Machine is an idea we came up with to talk more broadly about how Big Tech profits from the physical and intellectual labor of humans, whether they’re Amazon employees, creatives, data annotators, or content moderators. It’s a much more visceral, political, and global concept of how all of our labor is exploited and extracted by these companies.

A lot of the concerns about AI have been about existential risks, or whether the technology could reinforce inequalities or biases that exist in the data it was trained on, but are you arguing that just introducing AI into the economy will create a whole range of other inequalities?

Callum Cant: You can see this very clearly in a workplace like Amazon. Amazon’s AI systems, the technology that orchestrates its supply chain, automate the thinking, and what is left for humans in Amazon’s warehouses is grueling, repetitive, high-stress labor. You get technology that is meant to automate menial tasks and create freedom and time, but in reality, the introduction of algorithmic management systems in the workplace means people are forced into more routine, boring, low-skilled jobs.

Callum Cant of Fair Work argues that Amazon’s system creates a “repetitive and burdensome” work process for employees. Photo: Beata Zawrzel/NurPhoto via Getty Images

In one chapter of the book, an Irish actress, Chloe, discovers that someone is using an AI copy of her voice, a situation similar to the recent dispute between Scarlett Johansson and OpenAI. But Johansson has the platform and the funds to challenge something like this, whereas most people do not.

Callum Cant: Many of the solutions actually rely on collective power, not individual power, because, like anyone else, we have no power to tell OpenAI what to do. OpenAI doesn’t care if a few authors think it is running an information extraction regime. These companies are backed by billions of pounds and have no reason to care what we think of them.

But we have identified some ways that, collectively, we can begin to resist and try to change the way this technology is being deployed, because I think we all recognize that there is a potential for liberation here. But getting to it is going to require a huge amount of collaboration and conflict in a lot of places. Because while there are people who are getting enormously wealthy from this technology, the decisions made by a very small number of people in Silicon Valley are making all of us worse off. And I don’t think a better form of technology is going to come out of that unless we force them to change the way they do things.

Is there anything you would like to say to our readers? What actions can they take?

Callum Cant: It’s hard to give one universal piece of advice because people are all in very different positions. If you work in an Amazon warehouse, organize your coworkers and exert influence over your boss. If you work as a voice actor, you need to organize with other voice actors. Everyone has to deal with this in their own situation, so it’s impossible to give a single prescription.

We are all customers of large tech companies: should we boycott Amazon, for example?

Callum Cant: I think organizing in the workplace is more powerful, but there is also a role for organizing as consumers. If there are clear opportunities to use your purchasing power to make a difference, especially if the workers involved are calling for it, then by all means, do so. For example, if Amazon workers call for a boycott on Black Friday, we would encourage people to listen. Absolutely. But no matter where people take action and what actions they take, they need a set of principles to guide them. One of the key principles is that collective action is the primary path forward.

Source: www.theguardian.com