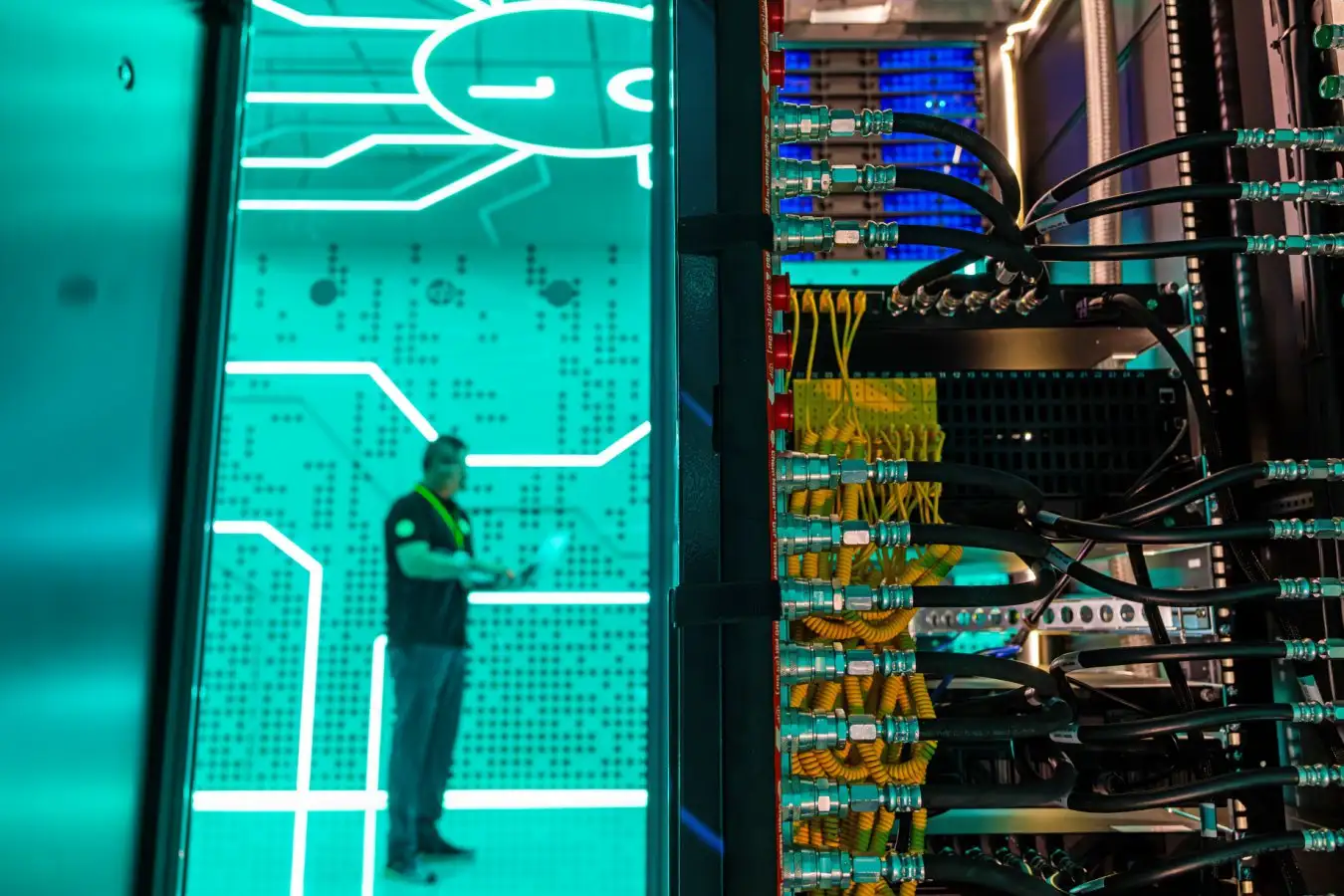

AI relies on data centers that consume a significant amount of energy

Jason Alden/Bloomberg/Getty

Choosing the right AI model for each task could save 31.9 terawatt-hours of energy this year alone, equivalent to the annual output of five nuclear reactors.

Thiago da Silva Barros at Côte d’Azur University in France and his colleagues examined 14 tasks for which people use generative AI tools, including text generation, speech recognition and image classification.

The team used public leaderboards, such as those hosted by the machine learning platform Hugging Face, to compare how well different models perform. They measured each model's energy use during inference (when an AI model generates a response) with a tool called CarbonTracker, and estimated total energy consumption by tracking how many times users downloaded each model.

“We estimated the energy consumption based on the model size, which allows us to make better predictions,” says da Silva Barros.

The findings indicate that switching from the highest-performing model to the most energy-efficient one for each of the 14 tasks would cut energy use by 65.8%, at the cost of only a 3.9% drop in output quality. The researchers believe this trade-off may be acceptable to most users.
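The per-task comparison behind figures like these can be illustrated with a short Python sketch. This is not the researchers' code: the model names, leaderboard scores and per-inference energy values are invented, each task is assumed to receive exactly one inference, and quality loss is taken to be the relative drop in leaderboard score, averaged across tasks.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    task: str
    score: float      # hypothetical leaderboard score (higher is better)
    energy_wh: float  # hypothetical energy per inference, in watt-hours


def switching_tradeoff(models):
    """Compare the top-scoring and the lowest-energy model for each task.

    Assumes one inference per task. Returns (fraction of energy saved,
    mean relative drop in score across tasks).
    """
    by_task = defaultdict(list)
    for model in models:
        by_task[model.task].append(model)

    energy_top = energy_eff = 0.0
    quality_losses = []
    for candidates in by_task.values():
        top = max(candidates, key=lambda m: m.score)      # best performer
        eff = min(candidates, key=lambda m: m.energy_wh)  # most frugal
        energy_top += top.energy_wh
        energy_eff += eff.energy_wh
        quality_losses.append((top.score - eff.score) / top.score)

    energy_saving = 1 - energy_eff / energy_top
    mean_quality_loss = sum(quality_losses) / len(quality_losses)
    return energy_saving, mean_quality_loss


# Invented catalogue: two tasks, two candidate models each.
catalog = [
    Model("big-text", "text generation", 0.90, 4.0),
    Model("small-text", "text generation", 0.86, 1.0),
    Model("big-asr", "speech recognition", 0.95, 2.0),
    Model("small-asr", "speech recognition", 0.93, 1.0),
]
saving, loss = switching_tradeoff(catalog)
print(f"energy saved: {saving:.1%}, quality lost: {loss:.1%}")
# energy saved: 66.7%, quality lost: 3.3%
```

In the study, overall figures also depend on how heavily each model is actually used, which this sketch ignores.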

Because some people already use the most energy-efficient models, the real-world saving would be smaller: if users switched from high-performance models to the most economical alternatives, overall energy consumption would fall by about 27.8%. “We were surprised by how much could be saved,” says team member Frédéric Giroire at the French National Centre for Scientific Research.

Da Silva Barros stresses that change is needed from both users and AI companies. “It’s essential to consider implementing smaller models, even if some performance is sacrificed,” he says. “As companies develop new models, it is crucial that they provide information about their energy consumption to help users assess their impact.”

Some AI firms are already reducing energy use through a technique called model distillation, in which a larger model is used to train a smaller, more efficient one. This approach is yielding significant gains: Chris Priest at the University of Bristol, UK, notes that Google recently claimed a 33-fold improvement in the energy efficiency of its Gemini model over the past year.
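The core of distillation can be illustrated with a minimal Python sketch, assuming the widely used soft-target formulation: during training, the smaller "student" model is pushed to reproduce the larger "teacher" model's output probabilities, softened by a temperature parameter. The function names, the temperature of 2.0 and the example logits are illustrative assumptions; the training loop that would minimize this loss is omitted, and nothing here describes how Google or any other firm actually distils its models.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Scaled by temperature**2, a common convention that keeps gradient
    magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # teacher "soft targets"
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical logits from the large model
print(distillation_loss(teacher, teacher))          # 0.0: student matches teacher
print(distillation_loss([0.0, 0.0, 0.0], teacher))  # positive: student still has to learn
```

A student that already matches the teacher incurs no loss; any mismatch gives a positive value that training would drive down.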

However, allowing users the option to select the most efficient models “is unlikely to significantly curb the energy consumption of data centers, as the authors suggest, particularly within the current AI landscape,” contends Priest. “By reducing energy per request, we can support a larger customer base more rapidly with enhanced inference capabilities,” he adds.

“Utilizing smaller models will undoubtedly decrease energy consumption in the short term, but various additional factors need consideration for any significant long-term predictions,” cautions Sasha Luccioni from Hugging Face. She highlights the importance of considering rebound effects, such as increased usage, alongside broader social and economic ramifications.

Luccioni points out that due to limited transparency from individual companies, research in this field often relies on external estimates and analyses. “What we need for more in-depth evaluations is greater transparency from AI firms, data center operators, and even governmental bodies,” she insists. “This will enable researchers and policymakers to make well-informed predictions and decisions.”


Source: www.newscientist.com