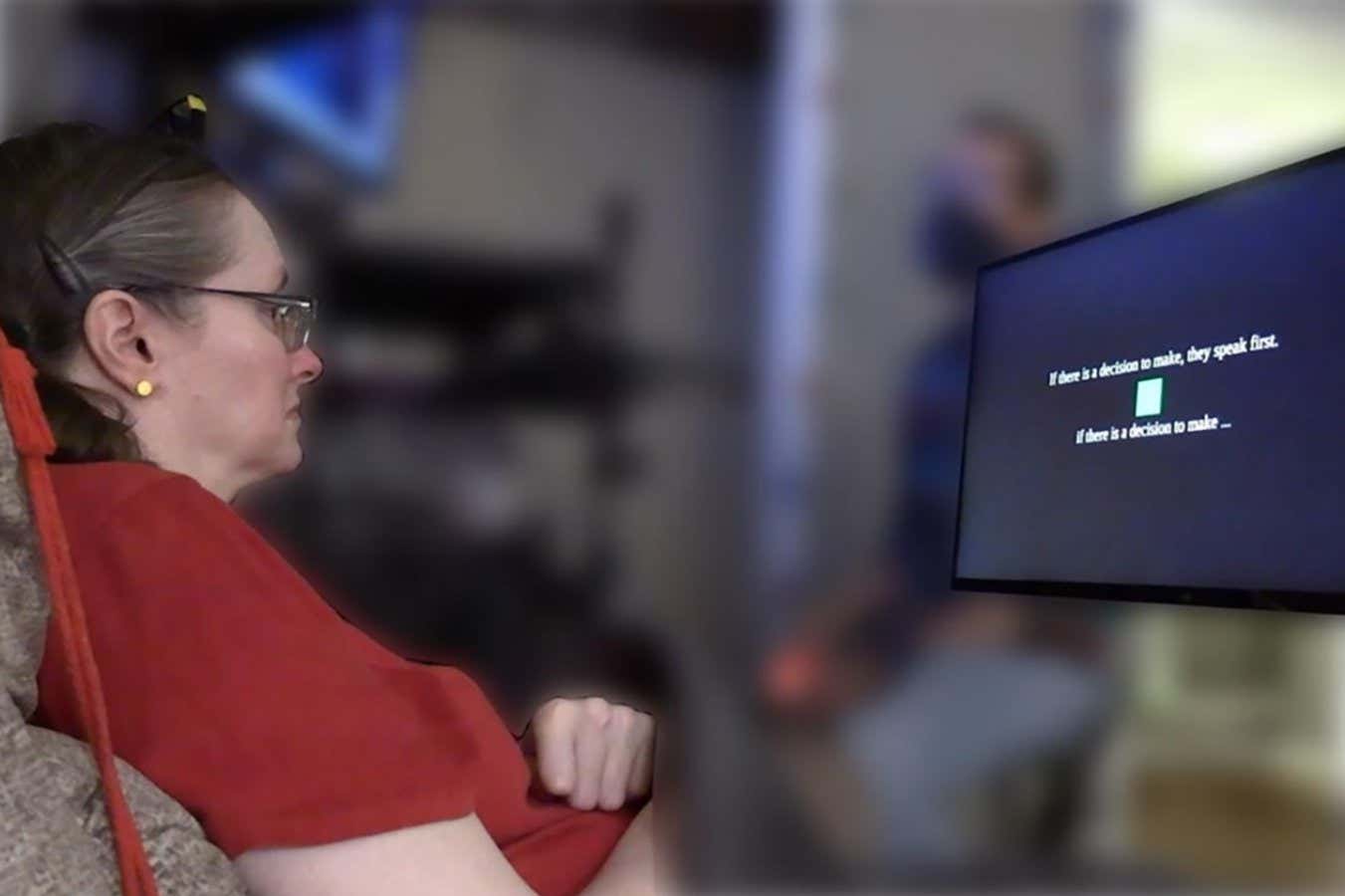

A person with paralysis using a brain-computer interface. The text at the top is a prompt, and the text below it is decoded in real time as she imagines speaking the phrase.

Emory BrainGate Team

A person with paralysis can convert their thoughts into speech just by imagining what they want to say.

Brain-computer interfaces can already decode the neural activity of people with paralysis when they physically attempt to speak, but this takes considerable effort. So Benyamin Meschede-Krasa at Stanford University and his colleagues looked for a less demanding approach.

“We aimed to determine if there was a similar pattern when individuals imagined speaking internally,” he notes. “Our findings suggest this could be a more comfortable method for people with paralysis to use the system to regain their ability to communicate.”

Meschede-Krasa and his colleagues enlisted four participants with severe paralysis due to either amyotrophic lateral sclerosis (ALS) or brainstem stroke. All had previously had microelectrodes implanted in motor areas linked to speech for research purposes.

The researchers asked participants to attempt to say a set of words and sentences, and also to imagine saying them. Brain activity was similar in both cases, but the signals were typically weaker during imagined speech.

The team trained AI models to decode these signals using a vocabulary of up to 125,000 words. To protect the privacy of people’s thoughts, the models were set to unlock only when they detected a specific password, Chitty Chitty Bang Bang, which they recognised with 98% accuracy.

Across a series of experiments, the researchers found that the models could correctly decode a single imagined word up to 74% of the time.

This demonstrates a promising application of the approach, though it is currently less reliable than systems that decode overt speech attempts, according to Frank Willett at Stanford. Ongoing enhancements to both the sensors and AI over the coming years may lead to greater accuracy, he suggests.

Participants strongly preferred this system, describing it as faster and less laborious than systems based on attempted speech, says Meschede-Krasa.

The approach is an “interesting direction” for future brain-computer interfaces, says Maris Cavan Stencel in Utrecht, the Netherlands. However, she points out that it requires distinguishing between what people intend to say and the thoughts they would rather not share. “I have doubts about whether anyone can truly differentiate between these types of mental speech and attempted speech,” she adds.

She adds that the system would need to be switched on and off so that it is clear when the user intends to voice their thoughts. “It is crucial to ensure that brain-computer interface-generated communications are conscious expressions individuals wish to convey, rather than internal thoughts they wish to keep private,” she states.

Benjamin Alderson-Day at Durham University in the UK argues that there’s no reason to label the system as a mind reader. “It effectively addresses very basic language constructs,” he explains. “Though it may seem alarming if thoughts are confined to single terms like ‘tree’ or ‘bird,’ we are still a long way from capturing the full range of individuals’ thoughts and their most intimate ideas.”

Willett underscores that all brain-computer interfaces are governed by federal regulations, ensuring adherence to the “highest standards of medical ethics.”


Source: www.newscientist.com