A multidisciplinary team has discovered that AI models, and the Transformer in particular, process memories in a manner similar to the hippocampus in the human brain. This breakthrough suggests that applying neuroscience principles, such as those of the NMDA receptor, to AI can improve memory function, advance the field of AI, and provide insight into human brain function. Credit: SciTechDaily.com

Researchers have discovered that memory consolidation processes in AI are similar to those in the human brain, particularly the hippocampus, opening the door to advances in AI and a deeper understanding of human memory mechanisms.

An interdisciplinary team of researchers from the Center for Cognition and Sociality and the Data Science Group within the Institute for Basic Science (IBS) has revealed a striking similarity between memory processing in artificial intelligence (AI) models and in the hippocampus of the human brain. The finding offers a new perspective on memory consolidation, the process that converts short-term memories into long-term ones, in AI systems.

Evolving AI through understanding human intelligence

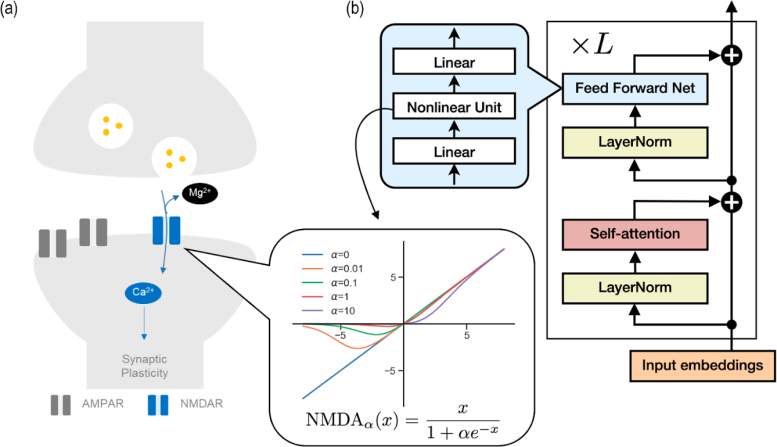

Understanding and replicating human-like intelligence has become a key research focus in the race to develop artificial general intelligence (AGI), led by influential organizations such as OpenAI and Google DeepMind. At the heart of these technological advances is the Transformer model [Figure 1], whose fundamental principles are now being explored in new depth.

Figure 1. (a) Diagram showing ion channel activity in a postsynaptic neuron. AMPA receptors are involved in the activation of the postsynaptic neuron, while NMDA receptors are blocked by magnesium ions (Mg²⁺) but induce synaptic plasticity through the influx of calcium ions (Ca²⁺) when the postsynaptic neuron is sufficiently activated. (b) Flow diagram representing the computational process within the Transformer AI model. Information is processed sequentially through stages such as the feedforward layer, layer normalization, and the self-attention layer. The graph of the current-voltage relationship of the NMDA receptor closely resembles the nonlinearity of the feedforward layer. The input-output graphs for different magnesium concentrations (α) show how the nonlinearity of the NMDA receptor changes. Credit: Institute for Basic Science

Brain learning mechanism applied to AI

The key to powerful AI systems is understanding how they learn and remember information. The research team focused on the learning principles of the human brain, particularly memory consolidation via the NMDA receptors in the hippocampus, and applied them to the AI model.

NMDA receptors are like smart doors in the brain that facilitate learning and memory formation. The presence of a brain chemical called glutamate excites nerve cells. Magnesium ions, on the other hand, act as small gatekeepers that block the door. Only when this ionic gatekeeper steps aside can substances flow into the cell. This is the process by which the brain creates and retains memories, and the role of the gatekeeper (magnesium ions) in the whole process is very specific.

AI models that mimic human brain processes

The research team made an interesting discovery: the Transformer model appears to use a gatekeeping process similar to that of the brain’s NMDA receptors [see Figure 1]. This discovery led the researchers to investigate whether the consolidation of Transformer memories could be controlled by a mechanism similar to the NMDA receptor gating process.

In animal brains, low magnesium levels are known to impair memory function. The researchers found that mimicking NMDA receptors can improve long-term memory in the Transformer. Just as changes in magnesium levels affect memory in the brain, adjusting the Transformer's parameters to reflect NMDA receptor gating improved memory in the AI model. This result suggests that established knowledge from neuroscience can help explain how AI models learn.
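To make the gating idea concrete, here is a minimal Python sketch of a magnesium-style gated nonlinearity of the kind that could sit in a feedforward layer. The article does not give the team's formula, so the functional form `x * sigmoid(x - ln(alpha))`, the function names, and the way `alpha` stands in for magnesium concentration are all assumptions made for illustration and should not be read as the researchers' implementation.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def nmda_like_activation(x: float, alpha: float = 1.0) -> float:
    """Hypothetical NMDA-inspired nonlinearity (illustrative assumption).

    Computes x * sigmoid(x - ln(alpha)), i.e. x / (1 + alpha * exp(-x)).
    `alpha` plays the role of the magnesium "gatekeeper":
      alpha == 0 -> gate always open, the function is linear (no block)
      alpha == 1 -> the familiar SiLU/Swish activation
      alpha  > 1 -> stronger block, small inputs are suppressed more
    """
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if alpha == 0.0:
        return x  # no gatekeeper: input passes through unchanged
    return x * sigmoid(x - math.log(alpha))


if __name__ == "__main__":
    # Compare how strongly each alpha setting gates a few sample inputs.
    for alpha in (0.0, 1.0, 10.0):
        row = [round(nmda_like_activation(x, alpha), 3) for x in (-2.0, 0.0, 1.0, 4.0)]
        print(f"alpha={alpha}: {row}")
```

In this sketch, raising `alpha` makes the gate harder to open, so weak inputs are damped while strong inputs still pass. That is one simple way to picture a magnesium-like parameter controlling how a model's feedforward layer responds.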

Expert insights on AI and neuroscience

“This research is an important step in the advancement of AI and neuroscience,” said C. Justin Lee, the institute’s director and a neuroscientist. “This will allow us to delve deeper into how the brain works and develop more advanced AI systems based on these insights.”

CHA Meeyoung, a data scientist on the team and at KAIST, says, “The human brain is remarkable in that it operates on minimal energy, unlike large-scale AI models that require vast amounts of resources. It opens up new possibilities for low-cost, high-performance AI systems that learn and remember information.”

Fusion of cognitive mechanisms and AI design

What sets this work apart is that it incorporates brain-inspired nonlinearity into an AI architecture, a significant advance in simulating human-like memory consolidation. The fusion of human cognitive mechanisms and AI design not only enables the creation of low-cost, high-performance AI systems, but also provides valuable insights into the workings of the brain through AI models.

Source: scitechdaily.com