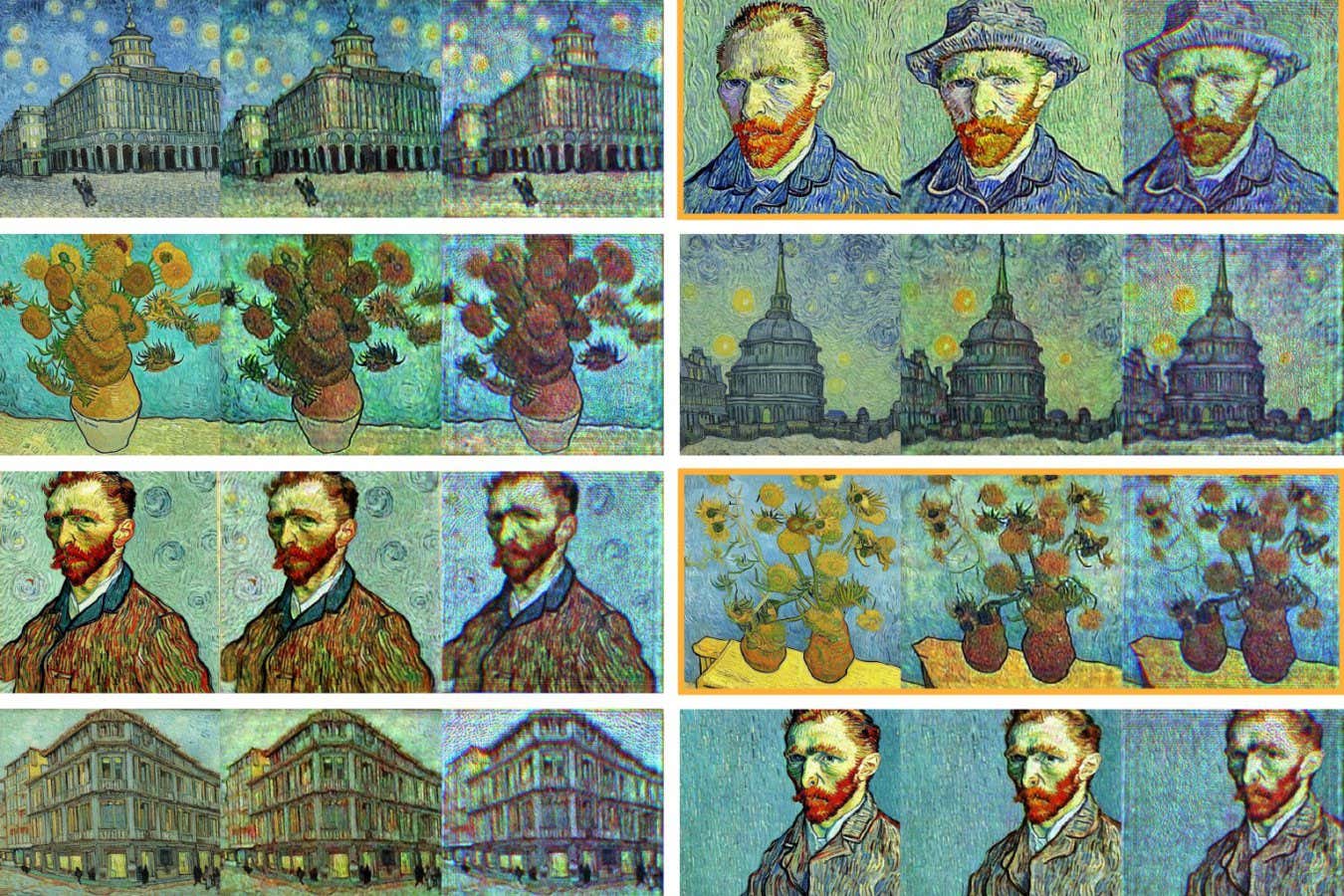

Vibrant Vincent van Gogh-inspired artwork generated by a traditional diffusion model (left in each set of three) and an optical image generator (right).

Shiqi Chen et al. 2025

AI image generators that use light rather than conventional computing hardware to create images could consume far less energy, potentially hundreds of times less.

When an AI model generates an image from text, it typically uses a method called diffusion. The model is first shown a vast collection of images and trained on how adding statistical noise scrambles them, and it encodes the patterns it learns as a set of rules. Given a new, noisy image, these rules can be run in reverse over many steps, producing a coherent image that matches a particular text prompt.
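To make the two phases concrete, the following is a minimal Python sketch of diffusion on a toy one-dimensional "dataset" of single numbers rather than images. It assumes a DDPM-style linear noise schedule, and because the toy data are Gaussian, the hypothetical `predict_noise` function computes the ideal denoiser in closed form instead of using a trained neural network. `add_noise` is the forward scrambling step, and `sample` runs the rules in reverse, one small step at a time, starting from pure noise. All names and constants are illustrative assumptions, not details of the study.

```python
import math
import random
from itertools import accumulate

# Toy "dataset" (assumption): each training example is one number drawn from
# a Gaussian N(DATA_MEAN, DATA_STD**2) instead of a full image.
DATA_MEAN, DATA_STD = 3.0, 0.5

# Hypothetical linear noise schedule for this toy example.
T = 200
BETAS = [1e-4 + (0.1 - 1e-4) * t / (T - 1) for t in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = list(accumulate(ALPHAS, lambda a, b: a * b))  # cumulative products


def add_noise(x0, t, rng):
    """Forward process: scramble a clean sample x0 with Gaussian noise up to step t."""
    eps = rng.gauss(0.0, 1.0)
    ab = ALPHA_BARS[t]
    return math.sqrt(ab) * x0 + math.sqrt(1.0 - ab) * eps, eps


def predict_noise(xt, t):
    """Stand-in for the trained network: the exact E[eps | x_t] for Gaussian data.

    A real diffusion model learns this function from data; here it is known
    in closed form only because the toy data distribution is Gaussian.
    """
    ab = ALPHA_BARS[t]
    var_xt = ab * DATA_STD**2 + (1.0 - ab)
    return math.sqrt(1.0 - ab) * (xt - math.sqrt(ab) * DATA_MEAN) / var_xt


def sample(rng):
    """Reverse process: start from pure noise and denoise over all T sequential steps."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        mean = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps_hat) / math.sqrt(ALPHAS[t])
        # Fresh noise is injected at every step except the last one.
        x = mean + (math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0) if t > 0 else 0.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [sample(rng) for _ in range(1000)]
    mean = sum(samples) / len(samples)
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
    print(f"sample mean = {mean:.3f}, sample std = {std:.3f}")
```

Run as a script, it draws 1,000 samples whose mean and spread should come out close to `DATA_MEAN` and `DATA_STD`. Every sample needs all `T` denoising steps in sequence, which is the computational cost the article goes on to describe.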

Producing realistic, high-resolution images requires many sequential diffusion steps, each demanding considerable computing power. In April, OpenAI reported that its new image generator had created more than 700 million images in its first week. Operating at this scale takes an enormous amount of energy and water to power and cool the hardware running the model.

Now, Aydogan Ozcan at the University of California, Los Angeles, and his colleagues have designed a diffusion-based image generator that works using beams of light. The encoding step is digital and uses very little energy, while the decoding step is entirely optical and requires no digital computation.

“Unlike traditional digital diffusion models that need hundreds or thousands of iterative steps, this method achieves image generation in a snapshot and requires no additional computation beyond the initial encoding,” says Ozcan.

The system first uses a digital encoder, trained on a publicly available image dataset, to produce a static pattern that can be transformed into an image. The encoder loads this pattern onto a liquid crystal display known as a spatial light modulator (SLM), which physically imprints it onto a laser beam. When the beam then passes through a second, decoding SLM, the desired image appears instantly on a screen, where it is recorded by a camera.
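The optical path can be pictured with a rough numerical simulation. The Python/NumPy sketch below assumes phase-only SLMs, a uniform laser beam, and free-space propagation between elements modelled with the angular spectrum method. The wavelength, pixel pitch, distances, the `toy_encoder` function and the random decoder mask are all hypothetical stand-ins, not the UCLA team's trained components. What it illustrates is the division of labour: only the encoder runs digitally, and everything after the first SLM is light propagating, with the camera recording intensity.

```python
import numpy as np

# Hypothetical optical parameters, chosen only for illustration.
WAVELENGTH = 520e-9   # green laser, metres
PIXEL_PITCH = 8e-6    # SLM pixel size, metres
N = 64                # SLM resolution (N x N pixels)
D1, D2 = 0.05, 0.05   # SLM-to-SLM and SLM-to-camera distances, metres


def propagate(field, distance):
    """Free-space propagation of a complex field via the angular spectrum method."""
    fx = np.fft.fftfreq(field.shape[0], d=PIXEL_PITCH)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / WAVELENGTH**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.exp(1j * kz * distance) * (arg > 0)  # discard evanescent waves
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def toy_encoder(noise):
    """Hypothetical stand-in for the trained digital encoder: noise -> SLM phase pattern."""
    return 2 * np.pi / (1 + np.exp(-noise))  # squash values into (0, 2*pi)


def optical_decode(encoded_phase, decoder_phase):
    """In the physical system light does these steps; here they are simulated numerically."""
    field = np.ones((N, N), dtype=complex)       # uniform laser beam
    field = field * np.exp(1j * encoded_phase)   # first SLM imprints the encoded pattern
    field = propagate(field, D1)                 # light travels to the second SLM
    field = field * np.exp(1j * decoder_phase)   # fixed decoding SLM
    field = propagate(field, D2)                 # light travels to the camera
    return np.abs(field) ** 2                    # camera records intensity only


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    decoder_phase = rng.uniform(0, 2 * np.pi, size=(N, N))  # hypothetical fixed mask
    image = optical_decode(toy_encoder(rng.standard_normal((N, N))), decoder_phase)
    print(image.shape, float(image.sum()))
```

With a trained encoder and decoder mask, the camera frame would be the generated picture; with these random stand-ins it is only a speckle pattern. Because propagation and phase modulation merely redistribute light, the total recorded intensity equals the input beam power, which makes a handy sanity check.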

Ozcan and his team used the system to generate simple black-and-white images, such as the digits 1 to 9, to test the diffusion model, as well as vivid images in the style of Vincent van Gogh. The results looked broadly similar to those produced by conventional image generators.

“This may be the first example of optical neural networks serving not just as lab demonstrations but as a computational tool capable of producing practical results,” says Alexander Lvovsky at the University of Oxford.

For the Van Gogh-inspired images, the system used just a few millijoules of energy per image, mostly for the liquid crystal screens, compared with the hundreds or thousands of joules needed by conventional diffusion models. According to Lvovsky, this means a conventional model uses about as much energy as an electric kettle, while the optical system uses only a tiny fraction of that.

The system would need to be modified before it could work in data centers like those running today's widely used image generators, but Ozcan believes its low energy demands could also make it suitable for portable electronics such as AI glasses.

Source: www.newscientist.com