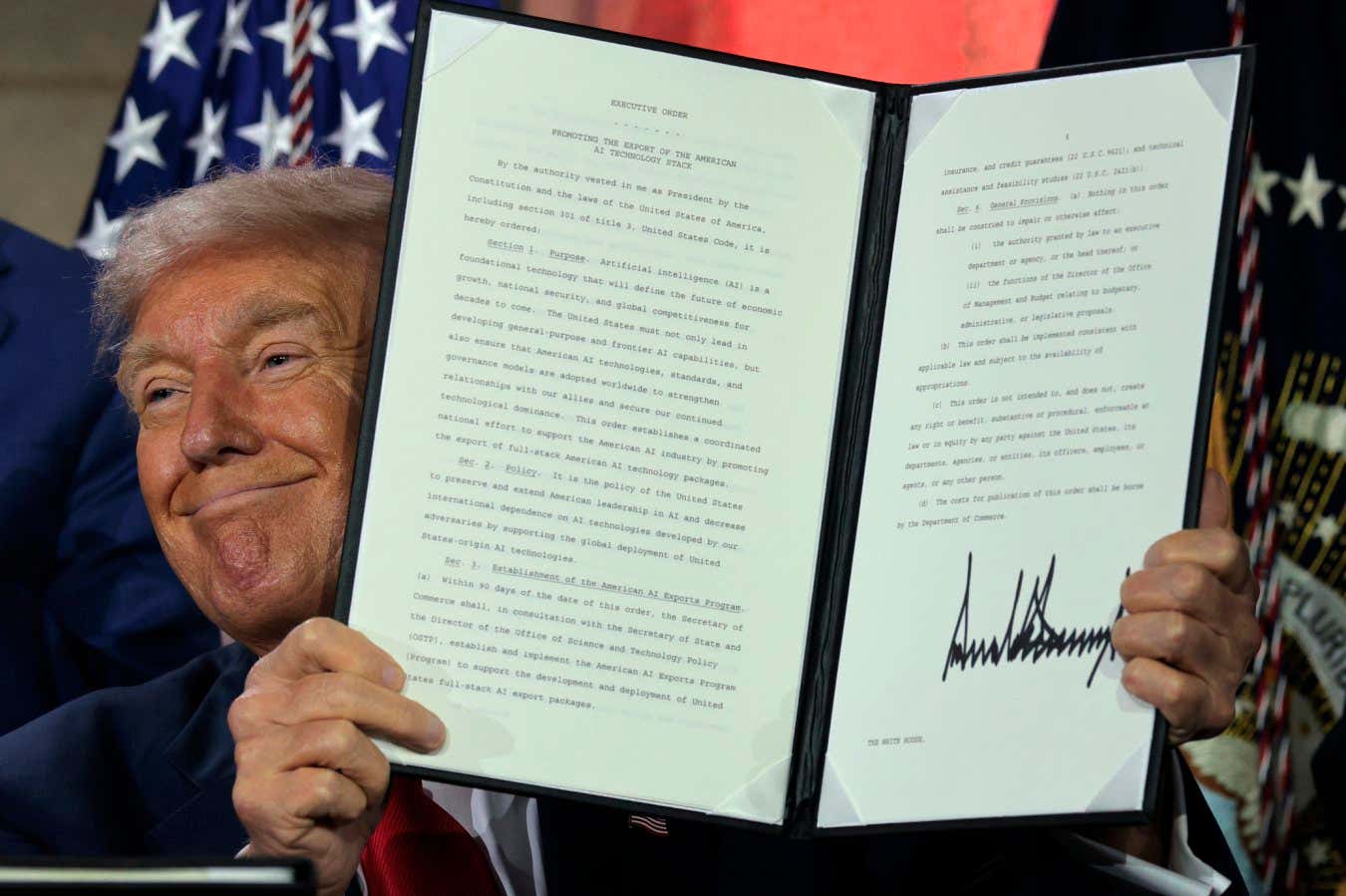

US President Donald Trump presents an executive order at an AI summit on July 23, 2025 in Washington, DC

Chip Somodevilla/Getty Images

President Donald Trump wants to ensure that federal contracts go only to artificial intelligence developers whose models are “free from ideological bias.” But the new requirements could let his administration impose its own worldview on tech companies’ AI models, and those companies may face significant challenges and risks in trying to modify their models to comply.

“The notion that government contracts should be structured to ensure AI systems are ‘objective’ and free from top-down ideological bias raises important questions,” states Becca Branum from the Center for Democracy and Technology, a public policy nonprofit based in Washington, DC.

The Trump White House’s AI Action Plan, released on July 23rd, recommends updating federal guidelines so that the government contracts only with large language model (LLM) developers who ensure their systems are objective and free from top-down ideological bias. On the same day, Trump signed an executive order titled “Preventing Woke AI in the Federal Government.”

The AI Action Plan also recommends that the National Institute of Standards and Technology revise its AI risk management framework to “remove references to misinformation, diversity, equity, inclusion, and climate change.” The Trump administration has already rolled back research into misinformation, halted DEI initiatives, dismissed researchers working on the US National Climate Assessment report, and cut clean energy funding through a Republican-backed bill.

“AI cannot genuinely be considered ‘free from top-down bias’ if the government itself imposes its worldview on the developers and users of these systems,” adds Branum. “These vague standards are prone to misuse.”

AI developers seeking federal contracts would now need to comply with the Trump administration’s demand for AI models free from “ideological bias.” Companies such as Amazon, Google, and Microsoft hold federal contracts to supply AI-enhanced cloud computing services to various government agencies, while Meta has made its Llama AI models available to US government agencies working on defense and national security.

In July 2025, the US Department of Defense’s Chief Digital and Artificial Intelligence Office announced new contracts worth up to $200 million each for companies including Google, OpenAI, and Elon Musk’s xAI. Notably, xAI’s inclusion comes after Musk’s controversial role in the Department of Government Efficiency (DOGE), which led to widespread government job cuts. xAI’s chatbot Grok also recently made headlines for expressing racist and antisemitic views, including calling itself “MechaHitler.” None of the companies responded to requests for comment from New Scientist, but some have released general statements praising Trump’s initiative.

In any case, tech companies may struggle to align their AI models with the Trump administration’s ideological preferences, says Paul Röttger at Bocconi University in Italy. That is because popular AI chatbots, such as OpenAI’s ChatGPT, can absorb particular biases from the vast and varied internet data on which they are trained.

Research by Röttger and his colleagues has shown that many AI chatbots from both US and Chinese developers express views aligned with those of liberal voters on issues such as gender pay equity and the participation of trans women in women’s sports. Although the reason for this pattern is unclear, the researchers suggest it might stem from developers training AI models to follow broad principles such as truthfulness, fairness, and kindness, rather than from any deliberate effort to embed a liberal bias.

AI developers can “fine-tune the model to offer specific responses to particular queries,” changing how it answers certain prompts. However, this leaves the model’s default perspectives and implicit biases largely intact, Röttger notes. Such targeted adjustments could also conflict with general AI training goals, such as prioritizing truthfulness.

Moreover, US tech firms risk alienating a significant portion of their global customer base if they try to align their commercial AI models with the Trump administration’s worldview. “We’re curious to see how this evolves, especially if the US is now trying to impose a specific ideology on models with a global user base,” Röttger cautions. “This could lead to serious complications.”

AI models could move closer to political neutrality if developers were more transparent about each model’s biases or built a diverse collection of models with differing ideological leanings, says Jillian Fisher at the University of Washington. However, “at this stage, achieving genuinely politically neutral AI models might be impossible due to the inherently subjective nature of neutrality and the multitude of human choices involved in building these systems,” she says.

Source: www.newscientist.com