As a human, you play a crucial role in identifying whether a photo or video was created using artificial intelligence.

Various detection tools are available to help, some commercial and others developed in research labs. With these deepfake detectors, you can upload or link to suspected fake media, and the detector will indicate the likelihood that it was generated by AI.

However, relying on your own senses and a few key clues can also offer valuable insight when analyzing media to determine whether it is a deepfake.

Because the regulation of deepfakes, especially in elections, has been slow to catch up with AI advancements, efforts must be made to verify the authenticity of images, audio, and videos.

One such detection tool is the DeepFake-o-meter, developed by Siwei Lyu at the University at Buffalo. This free and open-source tool combines algorithms from various labs to help users determine whether media was generated by AI.

The DeepFake-o-meter demonstrates both the advantages and limitations of AI detection tools by rating the likelihood of a video, photo, or audio recording being AI-generated on a scale from 0% to 100%.

AI detection algorithms can exhibit biases depending on the data they were trained on. While some tools, like the DeepFake-o-meter, are transparent about how much their results vary, commercial tools may have limitations that are not clearly disclosed.
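Because different algorithms can rate the same file very differently, it can help to look at the spread of scores rather than any single number. The snippet below is a minimal sketch of that idea in Python. It is not the DeepFake-o-meter's own code or API: the function `summarize_scores`, the detector names, the 50% threshold, and the example scores are all hypothetical placeholders chosen for illustration.

```python
from statistics import mean


def summarize_scores(scores: dict[str, float], threshold: float = 50.0) -> dict:
    """Summarize per-detector likelihoods (0-100) that a file is AI-generated.

    `scores` maps a detector name to its reported likelihood in percent.
    `threshold` is an assumed cut-off for calling a file "flagged"; it is
    not a value taken from any real tool.
    """
    if not scores:
        raise ValueError("at least one detector score is required")
    for name, value in scores.items():
        if not 0.0 <= value <= 100.0:
            raise ValueError(f"{name}: score {value} is outside 0-100")

    values = list(scores.values())
    flagged = sorted(name for name, v in scores.items() if v >= threshold)
    return {
        "mean": mean(values),
        "lowest": min(values),
        "highest": max(values),
        # A large spread means the detectors disagree with each other.
        "spread": max(values) - min(values),
        "flagged_by": flagged,
        # Agreement here means all detectors fall on the same side of the threshold.
        "detectors_agree": len(flagged) in (0, len(values)),
    }


# Placeholder values for illustration only, not real detector output.
example = {"detector_a": 12.0, "detector_b": 55.0, "detector_c": 91.0}
summary = summarize_scores(example)
print(summary)
```

A wide spread or a split verdict, as in this made-up example, is a signal to fall back on human judgment and the manual clues described in the rest of this piece rather than to trust any one score.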

Lyu aims to empower users to verify the authenticity of media by continually improving detection algorithms and encouraging collaboration between humans and AI in identifying deepfakes.

Audio

A notable instance of a deepfake in US elections was a robocall in New Hampshire using an AI-generated voice of President Joe Biden.

When subjected to various detection algorithms, the robocall clips showed varying probabilities of being AI-generated based on cues like the tone of the voice and presence of background noise.

Detecting audio deepfakes relies on anomalies like a lack of emotion or unnatural background noise.

Photographs

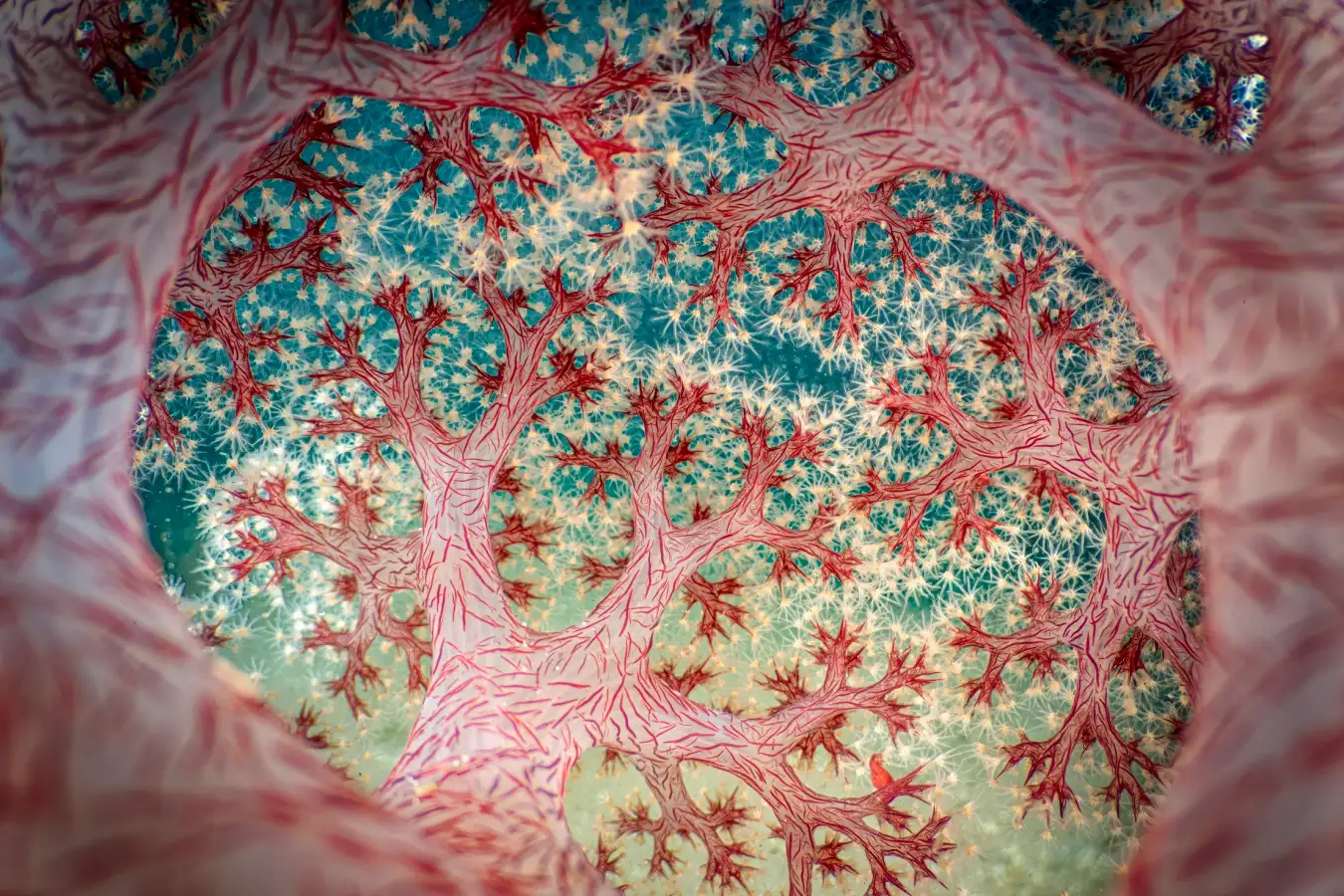

Photos can reveal inconsistencies with reality and with human anatomy that point to a potential deepfake, such as irregular body parts and unnatural glossiness.

Analyzing AI-generated images can uncover visual clues such as misaligned features and exaggerated textures.

Judging whether a photo is AI-generated involves examining details like facial features and textures.

Video

Video deepfakes can be particularly challenging due to the complexity of manipulating moving images, but visual cues like pixelated artifacts and irregularities in movements can indicate AI manipulation.

Detecting deepfake videos involves looking for inconsistencies in facial features, mouth movements, and overall visual quality.

The authenticity of videos can be determined by analyzing movement patterns, facial expressions, and other visual distortions that may indicate deepfake manipulation.

Source: www.theguardian.com